This article describes the relationship between Hypernetes and Kubernetes.

Hypernetes links

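- Hypernetes selling points

- GitHub repository

- Hyper_, a public cloud based on the HyperContainer and Hypernetes projects

- Zhihu topic: What do you think of hyper_?

- Secure NFV (Clearwater) deployment on Kubernetes (Hypernetes) in 10 min

- Project owner's blog

- Integrating Hypernetes into Kubernetes for security and multi-tenancy

- Hypernetes installation log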

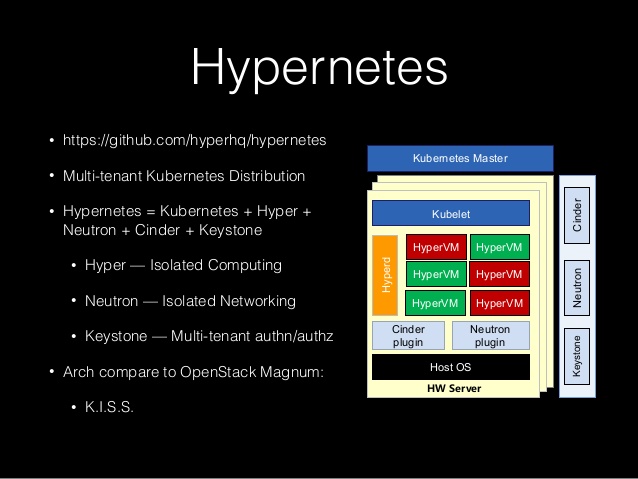

Introduction to Hypernetes

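On top of Kubernetes, Hypernetes adds multi-tenant authentication and authorization, SDN networking for containers, a Hyper-based container runtime, and Cinder-based persistent storage.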

Roughly speaking, Hypernetes = Bare-metal + Hyper + Kubernetes + Cinder (Ceph) + Neutron + Keystone.

Before going into the details of Hypernetes, some background: the state of multi-tenancy support in Kubernetes.

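Kubernetes offers fairly little multi-tenancy support. It has no notion of a "tenant"; only namespaces provide a logical resource pool (on which resource quotas can be set). Its authentication, authorization, networking, and container runtime are all still far from true multi-tenancy.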

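Authentication: Kubernetes supports client certificates, tokens, HTTP basic auth, and so on, but has no user management. Creating a new user means manually editing a configuration file and restarting the service. The latest version adds Keystone authentication, so users can be managed through Keystone.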

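Authorization: only the AlwaysDeny, AlwaysAllow, and ABAC modes are currently available. ABAC configures per-user permissions from a policy file, for example restricting a user to a specific namespace, but the policy file has to be edited again for every newly created resource such as a namespace.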
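To make the ABAC pain point concrete, the sketch below renders an ABAC policy file (one JSON `Policy` object per line, using the `abac.authorization.kubernetes.io/v1beta1` format) from a user-to-namespaces mapping. The `abac_policy` helper and the user and namespace names are hypothetical; the point is only that giving a user one more namespace means rewriting the file.

```python
import json


def abac_policy(grants):
    """Render ABAC policy lines from a {user: [namespace, ...]} mapping (hypothetical helper)."""
    lines = []
    for user, namespaces in sorted(grants.items()):
        for ns in sorted(namespaces):
            policy = {
                "apiVersion": "abac.authorization.kubernetes.io/v1beta1",
                "kind": "Policy",
                "spec": {"user": user, "namespace": ns, "resource": "*", "apiGroup": "*"},
            }
            lines.append(json.dumps(policy))
    return "\n".join(lines)


grants = {"alice": ["team-a"]}
before = abac_policy(grants)

# A new namespace for alice means the whole policy file must be regenerated.
grants["alice"].append("team-b")
after = abac_policy(grants)
print(len(after.splitlines()))  # 2
```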

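Kubernetes requires every container in a cluster to be able to reach every other container, whereas multi-tenancy requires network isolation between tenants.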

Docker's security still falls well short of virtualization.

For these reasons, many companies run Kubernetes inside virtual machines, for example Google Container Engine and OpenStack Magnum.

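Running containers inside VMs improves security but introduces other problems: container networking cannot be managed centrally by the IaaS layer, containers cannot use the IaaS layer's persistent storage directly, and the resources of all containers cannot be scheduled centrally.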

First, the Hypernetes architecture diagram.

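Hypernetes adds several components on top of Kubernetes:

- A Tenant concept: networks and resources (ns, pod, svc, rc, etc.) are isolated between tenants. Tenants are managed through Keystone, which also provides authentication and authorization.

- Neutron gives each tenant a layer-2 isolated network and supports Neutron's various plugins (currently mainly OVS).

- Hyper provides a virtualization-based container runtime that guarantees container isolation.

- Cinder brings various kinds of persistent storage to containers (currently mainly Ceph).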
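The tenant boundary can be modelled in a few lines: each namespace belongs to exactly one tenant, and a caller may only touch namespaced resources (pods, services, rcs, ...) in namespaces its tenant owns. The `TenantRegistry` class is a hypothetical illustration of that rule, not Hypernetes code; in Hypernetes the caller's tenant identity would come from Keystone.

```python
class TenantRegistry:
    """Hypothetical in-memory map from namespaces to their owning tenant."""

    def __init__(self):
        self._owner = {}  # namespace -> tenant

    def create_namespace(self, tenant, namespace):
        if namespace in self._owner:
            raise ValueError(f"namespace {namespace!r} already exists")
        self._owner[namespace] = tenant

    def can_access(self, tenant, namespace):
        # Namespaced resources inherit isolation from the namespace owner.
        return self._owner.get(namespace) == tenant


registry = TenantRegistry()
registry.create_namespace("tenant-a", "web")
registry.create_namespace("tenant-b", "db")
print(registry.can_access("tenant-a", "web"))  # True
print(registry.can_access("tenant-a", "db"))   # False
```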

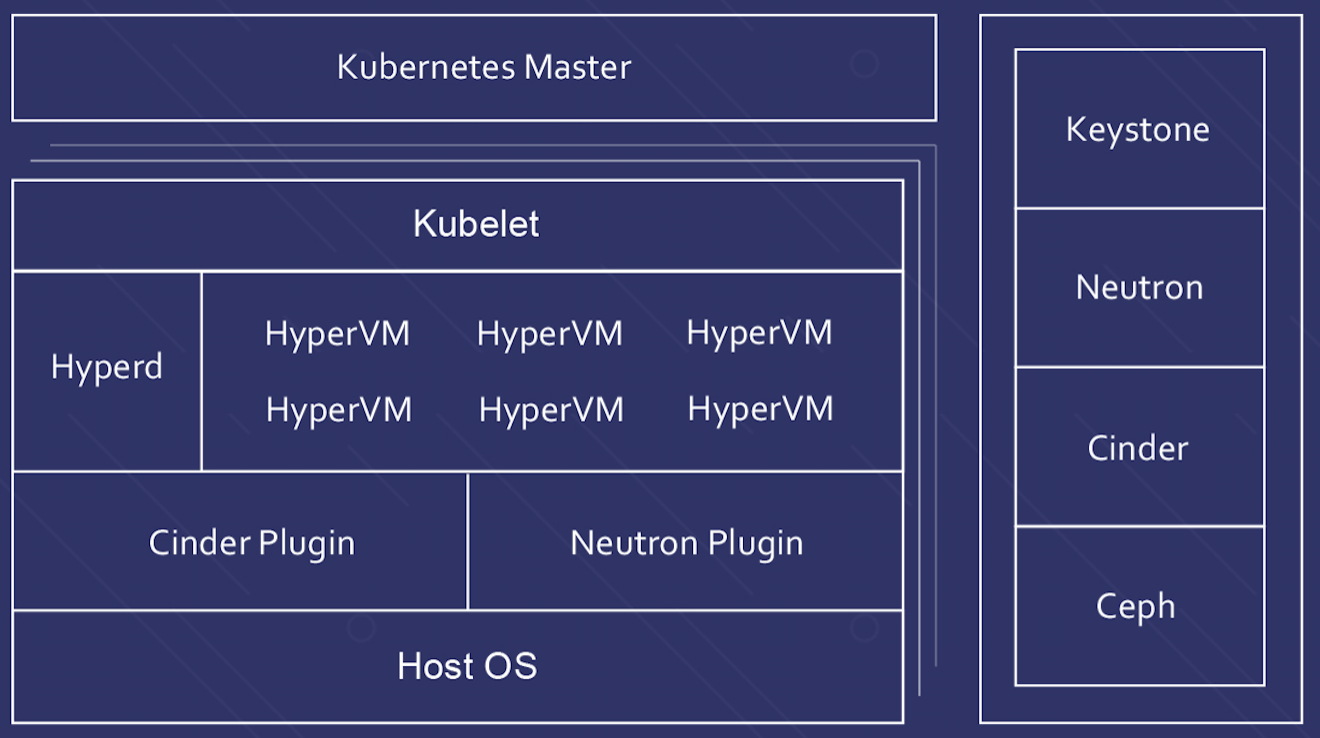

Inside Hypernetes, the detailed architecture looks like this.

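To support multi-tenancy, Hypernetes adds many components on top of Kubernetes, all of them provided as plugins.

This makes Hypernetes easy to extend, and easy to run alongside an existing IaaS on the same infrastructure.

For example, if you don't like Neutron, you can replace it with ODL (OpenDaylight) or another SDN controller.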
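The plugin design can be pictured as a small interface that the core depends on, with interchangeable back ends behind it. The `NetworkPlugin` interface, its method name, and both implementations are hypothetical stand-ins rather than the actual Hypernetes plugin API; they only show why swapping Neutron for ODL leaves the caller untouched.

```python
from abc import ABC, abstractmethod


class NetworkPlugin(ABC):
    """Hypothetical interface for a tenant-network back end."""

    @abstractmethod
    def create_tenant_network(self, tenant: str) -> str:
        """Create an isolated network for a tenant and return its ID."""


class NeutronPlugin(NetworkPlugin):
    def create_tenant_network(self, tenant: str) -> str:
        # A real plugin would call the Neutron API here.
        return f"neutron-net-{tenant}"


class OdlPlugin(NetworkPlugin):
    def create_tenant_network(self, tenant: str) -> str:
        # A real plugin would call OpenDaylight here.
        return f"odl-net-{tenant}"


def provision_tenant(plugin: NetworkPlugin, tenant: str) -> str:
    # The caller depends only on the interface, not on a concrete back end.
    return plugin.create_tenant_network(tenant)


print(provision_tenant(NeutronPlugin(), "tenant-a"))  # neutron-net-tenant-a
print(provision_tenant(OdlPlugin(), "tenant-a"))      # odl-net-tenant-a
```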

Relationship with Kubernetes

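The hypervisor-based container runtime for Kubernetes: Hyper provides a virtualization-based container runtime that guarantees container isolation, and has contributed it to the kubernetes organization as frakti. frakti plugs natively into Kubernetes through the CRI (Container Runtime Interface), much as CoreOS's rkt does. In Hypernetes v1.6, the Hypernetes container runtime will also switch from runV, an OCI-standard container runtime, to frakti.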

Hypernetes is forked from the Kubernetes project.

For thoughts on virtualization and containerization, see the project owner's presentation Re-Think of Virtualization and Containerization.

Hypernetes uses Kubernetes RBAC (Role-Based Access Control) to implement access control, as shown in the figure below.

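userA and userB are connected to namespace 1 through [role_binding1] -> [role1], which grants them permission to operate in namespace 1. In addition, userB obtains permission to operate in namespace 2 through [role_binding2] -> [role2].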
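The bindings in the figure can be written down as data and queried. This is a simplified, hypothetical model (the `Role`/`RoleBinding` classes, the verb sets, and `allowed` are illustrative, not the Kubernetes RBAC authorizer): a user may perform a verb in a namespace if some RoleBinding in that namespace names the user and points at a Role that grants the verb.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Role:
    name: str
    namespace: str
    verbs: frozenset


@dataclass(frozen=True)
class RoleBinding:
    name: str
    namespace: str
    subjects: tuple  # user names
    role: Role


role1 = Role("role1", "ns1", frozenset({"get", "list", "create"}))
role2 = Role("role2", "ns2", frozenset({"get", "list", "create"}))
bindings = [
    RoleBinding("role_binding1", "ns1", ("userA", "userB"), role1),
    RoleBinding("role_binding2", "ns2", ("userB",), role2),
]


def allowed(user, verb, namespace):
    """True if any binding in the namespace grants the verb to the user."""
    return any(
        b.namespace == namespace and user in b.subjects and verb in b.role.verbs
        for b in bindings
    )


print(allowed("userA", "get", "ns1"))  # True
print(allowed("userA", "get", "ns2"))  # False
print(allowed("userB", "get", "ns2"))  # True
```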

Proposal for Hypernetes 1.6 auth

- Continue to use Keystone. Keystone authentication is enabled by passing the `--experimental-keystone-url=` option to the API server during startup.

- Use RBAC for authorization: enable the authorization module with `--authorization-mode=RBAC`.

- Add an auth-controller to manage the RBAC policy.

Auth workflow:

```
kubectl           apiserver            keystone             rbac          auth-controller
   +                  +                    +                  +                  +
   |        1         |                    |                  |                  |
   +-----request----->|                    |                  |                  |
   |                  |         2          |                  |<--update policy--+
   |                  +---Authentication-->|                  |                  |
   |                  |         3          |                  |                  |
   |                  |<-user.info,success-+                  |                  |
   |                  |         4          |                  |                  |
   |                  +-------------Authorization------------>|                  |
   |                  |         5          |                  |                  |
   |                  |<----------------success---------------+                  |
   |        6         |                    |                  |                  |
   |<---response------+                    |                  |                  |
   |                  |                    |                  |                  |
   +                  +                    +                  +                  +
```

To update the RBAC policy, the auth-controller needs user info. When a user is updated, the auth-controller updates the `ClusterRole` and `RoleBinding` that grant permissions on namespaces. Maybe we should add User as a kind of resource. The auth-controller also watches `Namespace` and updates the permissions on namespace-scoped resources for regular users.

1. kubectl sends a request with (username, password) to the apiserver.

2. The apiserver receives the request from kubectl and calls AuthenticatePassword(username, password) to check (username, password) against Keystone.

3. If the check succeeds, it returns (user.info, true) and processing continues with step 4. If the check fails, it returns (user.info, false) and the request fails.

4. Call Authorize(authorizer.Attributes) to check the RBAC roles and bindings.

5. If the check succeeds, it returns true and the requested operation is executed. If the check fails, it returns false and the request fails.

6. Return the request result.
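The six steps can be traced end to end with stubs. In this sketch `FakeKeystone` stands in for Keystone password checking and `rbac_authorize` for the RBAC authorizer; both are hypothetical, as is the `grants` layout, and the 401/403 status codes simply follow the usual HTTP convention for authentication and authorization failures. Only the order of checks (authenticate, then authorize, then execute) comes from the proposal.

```python
class FakeKeystone:
    """Hypothetical stand-in for Keystone password authentication."""

    def __init__(self, users):
        self._users = users  # username -> password

    def authenticate_password(self, username, password):
        # Steps 2-3: return (user_info, ok), mirroring the proposal's (user.info, bool).
        if username in self._users and self._users[username] == password:
            return {"name": username}, True
        return None, False


def rbac_authorize(user_info, verb, namespace, grants):
    # Steps 4-5: grants maps (user, namespace) -> set of allowed verbs.
    return verb in grants.get((user_info["name"], namespace), set())


def handle_request(keystone, grants, username, password, verb, namespace):
    # Step 1: kubectl sends (username, password) along with the request.
    user_info, ok = keystone.authenticate_password(username, password)
    if not ok:
        return 401, "authentication failed"
    if not rbac_authorize(user_info, verb, namespace, grants):
        return 403, "forbidden"
    # Step 6: execute the operation and return the result.
    return 200, f"{verb} in {namespace} done"


keystone = FakeKeystone({"alice": "s3cret"})
grants = {("alice", "ns1"): {"get", "create"}}
print(handle_request(keystone, grants, "alice", "s3cret", "get", "ns1"))  # (200, 'get in ns1 done')
print(handle_request(keystone, grants, "alice", "wrong", "get", "ns1"))   # (401, 'authentication failed')
print(handle_request(keystone, grants, "alice", "s3cret", "get", "ns2"))  # (403, 'forbidden')
```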

Add user

- Use a ThirdPartyResource to create the user resource:

```yaml
apiVersion: extensions/v1beta1
kind: ThirdPartyResource
metadata:
  name: user.stable.example.com
description: "A specification of a User"
versions:
- name: v1
```

- Add a User object. More detailed user information may be set through custom fields such as username; at minimum, username is required.

```yaml
apiVersion: "stable.example.com/v1"
kind: User
metadata:
  name: alice
username: "alice chen"
```
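Since the proposal requires at least `username`, whatever consumes User objects would want to reject incomplete ones before granting RBAC permissions. A minimal validation sketch over a User object already parsed into a dict; `validate_user` and its error messages are hypothetical, and where such a check would run (auth-controller or elsewhere) is an assumption.

```python
def validate_user(obj):
    """Return a list of problems with a User object parsed into a dict (hypothetical)."""
    problems = []
    if obj.get("apiVersion") != "stable.example.com/v1":
        problems.append("unexpected apiVersion")
    if obj.get("kind") != "User":
        problems.append("kind must be User")
    if not obj.get("metadata", {}).get("name"):
        problems.append("metadata.name is required")
    if not obj.get("username"):
        problems.append("username is required")
    return problems


alice = {
    "apiVersion": "stable.example.com/v1",
    "kind": "User",
    "metadata": {"name": "alice"},
    "username": "alice chen",
}
print(validate_user(alice))  # []
```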

auth-controller

The auth-controller is responsible for updating RBAC roles and bindings when:

- a new user is created: allow the user to create/get namespaces

- a namespace is created/deleted

- a network is created/deleted

- a user is removed: delete all data related to the user
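Those responsibilities amount to an event dispatcher over RBAC objects. The sketch keeps the objects in a dict keyed by (kind, namespace, name) and reacts to four event types; the handler names and store layout are hypothetical, while the object names (`namespace-creater`, `access-resources-within-namespace`, `namespace-binding`) follow the manifests in this proposal. A real controller would watch the API server and write objects with superuser credentials. Network events are left out because the proposal handles Network permissions in the ClusterRole granted at user creation.

```python
class AuthController:
    """Hypothetical sketch of the auth-controller's RBAC bookkeeping."""

    def __init__(self):
        # (kind, namespace, name) -> spec; namespace is None for cluster-scoped objects.
        self.objects = {}

    def on_user_created(self, user):
        # Bind the new user to the shared namespace-creater ClusterRole.
        self.objects[("ClusterRoleBinding", None, f"{user}-namespace-creater")] = {
            "subjects": [user],
            "roleRef": ("ClusterRole", "namespace-creater"),
        }

    def on_namespace_created(self, namespace, owner):
        # Give the owner full access to resources inside the new namespace.
        self.objects[("Role", namespace, "access-resources-within-namespace")] = {
            "resources": ["*"],
            "verbs": ["*"],
        }
        self.objects[("RoleBinding", namespace, "namespace-binding")] = {
            "subjects": [owner],
            "roleRef": ("Role", "access-resources-within-namespace"),
        }

    def on_namespace_deleted(self, namespace):
        # The Role and RoleBinding are removed together with the namespace.
        for key in [k for k in self.objects if k[1] == namespace]:
            del self.objects[key]

    def on_user_deleted(self, user):
        # Remove every binding that names the user, including the ClusterRoleBinding.
        for key in [k for k, v in self.objects.items() if user in v.get("subjects", [])]:
            del self.objects[key]


ctl = AuthController()
ctl.on_user_created("alice")
ctl.on_namespace_created("team-a", "alice")
print(sorted(k[0] for k in ctl.objects))  # ['ClusterRoleBinding', 'Role', 'RoleBinding']
```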

The auth-controller watches resources including User, Namespace, and Network, and updates RBAC roles and bindings using superuser credentials. First, create a ClusterRole that grants regular users permissions on namespaces:

```yaml
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1alpha1
metadata:
  # "namespace" omitted since ClusterRoles are not namespaced.
  name: namespace-creater
rules:
- apiGroups: [""]
  resources: ["namespaces"]  # RBAC rules use the plural resource name
  verbs: ["get", "create"]   # "delete" should probably be included too, so users can delete their own namespaces
```

When a new user is created:

Create a ClusterRoleBinding for the new regular user that references the namespace-creater role:

```yaml
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1alpha1
metadata:
  name: username-namespace-creater
subjects:
- kind: User
  name: username
roleRef:
  kind: ClusterRole
  name: namespace-creater
  apiGroup: rbac.authorization.k8s.io
```

When a new namespace is created:

Create a namespaced Role:

```yaml
kind: Role
apiVersion: rbac.authorization.k8s.io/v1alpha1
metadata:
  namespace: namespace
  name: access-resources-within-namespace
rules:
- apiGroups: [""]
  resources: ["*"]  # ResourceAll
  verbs: ["*"]      # VerbAll
```

Create a RoleBinding that references the namespaced Role:

```yaml
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1alpha1
metadata:
  name: namespace-binding
  namespace: namespace
subjects:
- kind: User
  name: username
roleRef:
  kind: Role
  name: access-resources-within-namespace
  apiGroup: rbac.authorization.k8s.io
```
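Because these two manifests differ per namespace only in the namespace and the subject, the auth-controller could render them from a template. A minimal sketch that builds the Role and RoleBinding above as Python dicts; `namespace_rbac` is a hypothetical helper, and the API call that would actually create the objects is left out.

```python
def namespace_rbac(namespace, username):
    """Build the Role and RoleBinding for one namespace and user (hypothetical helper)."""
    api_version = "rbac.authorization.k8s.io/v1alpha1"
    role = {
        "kind": "Role",
        "apiVersion": api_version,
        "metadata": {"namespace": namespace, "name": "access-resources-within-namespace"},
        "rules": [{"apiGroups": [""], "resources": ["*"], "verbs": ["*"]}],
    }
    binding = {
        "kind": "RoleBinding",
        "apiVersion": api_version,
        "metadata": {"name": "namespace-binding", "namespace": namespace},
        "subjects": [{"kind": "User", "name": username}],
        "roleRef": {
            "kind": "Role",
            "name": role["metadata"]["name"],  # must match the Role created above
            "apiGroup": "rbac.authorization.k8s.io",
        },
    }
    return role, binding


role, binding = namespace_rbac("team-a", "alice")
print(binding["roleRef"]["name"])  # access-resources-within-namespace
```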

The Role and RoleBinding are removed when the namespace is deleted.

When a network is created/deleted:

Network will be defined as a non-namespaced resource, so permissions on Network can be set in the ClusterRole when a user is created.

When a user is deleted:

At a minimum, the ClusterRoleBinding related to the user is removed.

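Document information

- Author: Yu Peng

- Link: https://www.y2p.cc/2017/06/05/hypernetes-and-kubernetes/

- Copyright notice: free to repost, non-commercial, no derivatives, attribution required (Creative Commons 3.0 license)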